Introduction

Recently I launched a personal project, the Mojang blacklist check, expecting that a few people might use it. But to my surprise, it quickly gained significant traction, with over 1,000 daily users within the first week.

However, this sudden popularity brought an unexpected challenge: the costs associated with Cloudflare D1 started to skyrocket.

The Cost with Cloudflare D1

Cloudflare D1's pricing is extremely generous, with the paid plan including 25 billion monthly rows (read) and 50 million monthly writes for just $5. When I first started the blacklist tool project, I looked at these numbers and thought, "There's no way I'll ever hit those."

But, as usual, the law of exponential numbers once again bit me in the rear.

Because every time the tool "refreshes", it needs to do a full DIFF against the Mojang blacklist, which is about 2,500 rows. Do this every 15 minutes, and you're already up to 7.2 million reads per month.

This doesn't even include the actual usage of the tool, which has the same terrible scaling characteristics. Every time the page loads, it compiles a full history to seed the user with context. And on top of that, every time a new test is done, a handful of variants are generated (to check for subdomains, evasion, DNS info, etc.), and each of these variants needs to be hashed and then checked against the database.

And before you know it, you're hit with this:

CF bill

CF bill

Sure, it's not a massive amount of money, but for a student like me, it's a lot to throw at a free project.

Cloudflare Comes to the Rescue... Again

Who's better at solving Cloudflare problems than Cloudflare themselves? Of course, they would love for you to stay in their ecosystem (and I'm happy to oblige because their stuff is great).

You can already open a manual TCP connection from a worker, letting you implement any protocol you want, and thanks to some amazing OSS work by Brianc, we have node-postgres, which has native support for Cloudflare's TCP sockets.

There's not really much more you need than this, because from here on out, it's just regular Postgres. But you also lose a lot of the "edge" benefits of D1. The thing that makes D1 so special is that it's a distributed database, meaning that your data is replicated across all of Cloudflare's data centers. This means that no matter where your user is located, they will always have a low-latency connection to the database.

Taking control of your own database means that the latency between the worker and database will be a lot higher, which becomes more noticeable for every query (because of the cumulative round-trip time).

Thankfully, Cloudflare lets us have the best of both worlds. Enter Cloudflare Hyperdrive.

What is Hyperdrive?

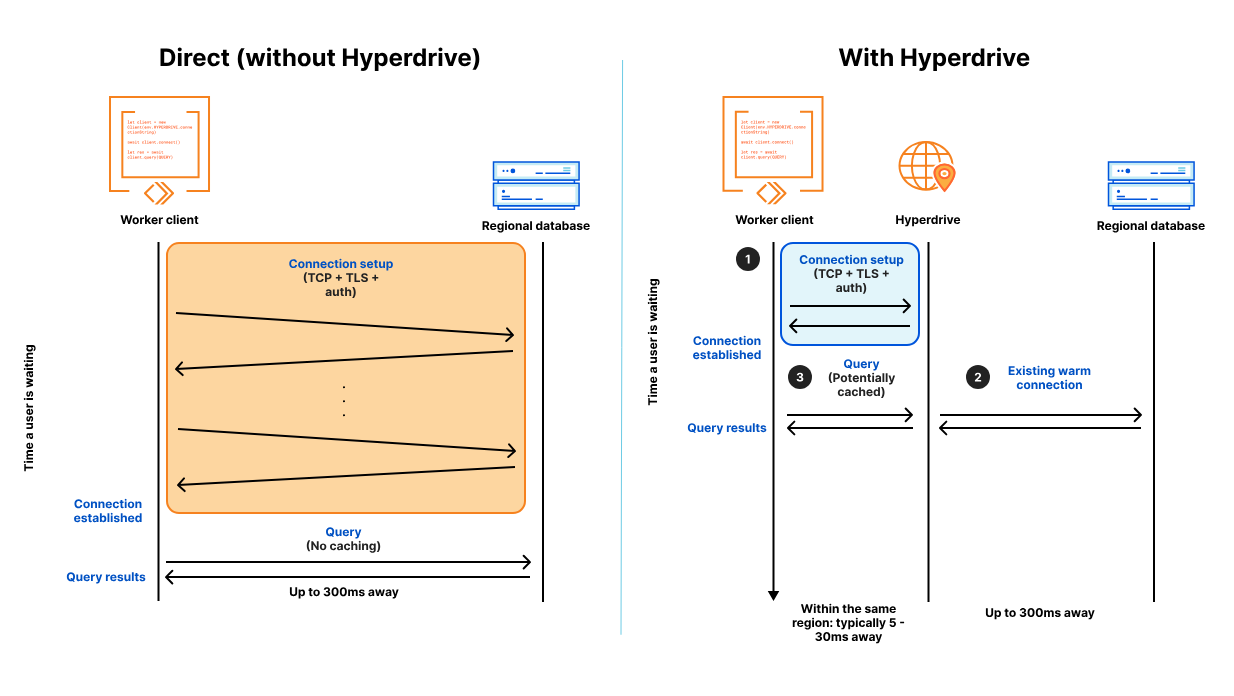

Hyperdrive looks like a regular tunnel/proxy service on the surface, but it's actually a lot cooler than that. It will man-in-the-middle your Postgres connection, and not just forward the commands, but understand the queries being done and cache them for you at the edge. This means that if you have a query that is run often, it will be cached at the edge, and subsequent requests will be served from the edge cache instead of going all the way to your database.

Hyperdrive diagram from https://developers.cloudflare.com/hyperdrive/configuration/how-hyperdrive-works/

Hyperdrive diagram from https://developers.cloudflare.com/hyperdrive/configuration/how-hyperdrive-works/

This means that you can have a single database in a single region (in my case, on a cheap Hetzner VPS) and still have low-latency access to it from all over the world. And should Hyperdrive get too expensive, you can always fall back to your database directly, albeit with higher latency. Not the best user experience, but a worthy trade-off for cost savings.

Migrating from D1 to Hyperdrive (SQLite to Postgres)

Migrating from D1 to Postgres + Hyperdrive is pretty straightforward, but does come with a major gotcha.

Though Cloudflare will happily let you export your data as a SQLite script through Wrangler, there are some architectural problems you might run into.

The most prominent one is that SQLite doesn't give a single hoot about capitalization in table and column names, while Postgres does. This, combined with pgloader being a bit finicky with non-standard table names, makes for a great recipe to waste your entire afternoon trying to figure out why your queries don't work.

To avoid these issues, I recommend the following steps:

- Export your D1 database as a SQLite script using Wrangler.

npx wrangler d1 export <database_name> --remote --output=./database.sql

- Port the SQLite script to a "fat" SQLite database using the sqlite3 CLI.

sqlite3 fat_database.db < database.sql

- Make a pgloader script to port the SQLite database to Postgres, including casing for table and column names.

- Example pgloader script:

LOAD DATABASE FROM sqlite://fat_database.db INTO postgresql://USER:PASSWORD@SERVER/DB WITH include no drop, create tables, quote identifiers ;

- Run pgloader with the script.

pgloader migrate.pgloader

- Manually go through your queries to address some inconsistencies between SQLite and Postgres.

- Make sure to quote table and column names with double quotes if they contain uppercase letters or special characters. Otherwise, Postgres will silently lowercase them, leading to "table not found" errors.

- If you're using Prisma, make sure to update your

numberfields toBigInt, as Postgresintegerfields are 4 bytes, while SQLiteintegerfields are 8 bytes.

Conclusion

The migration from D1 to Hyperdrive is now complete, and I'm cautiously optimistic about the results. While the upfront complexity of managing my own Postgres instance and dealing with SQLite-to-Postgres quirks was definitely a time investment, the potential cost savings are significant (expecting continued growth in usage).

Of course, the real test will come over the next month as I collect actual usage data and see how Hyperdrive performs under real-world conditions. Will the edge caching live up to the promise? Will the latency remain acceptable? And most importantly, will my wallet thank me?

I'll be monitoring the costs closely and will follow up with a detailed comparison once I have meaningful data. For now, if you're running into similar scaling issues with D1, Hyperdrive is definitely worth considering; just be prepared to spend an afternoon wrestling with case-sensitive table names.

Stay tuned for the results, and should my projects go down in flames, at least I'll have a good story to tell!