Lights are cool. That's just a fact. You have pretty colored static ones, you have wobbly moving ones, you have ones that can do both. You know what else has wobbly moving lights and effects? Minecraft. ImagineFun, the Minecraft Disney experience I work for, has a lot of them.

And in the past few years, we've made a ton of improvements to our workflows and pipelines to make it easier for our creative folks to make cool stuff. But shows are still made by people, and people are still people. And people are lazy. And people are forgetful. And people are bad at math. And people are bad at random numbers. This doesn't mean we're bad at making shows, but it does mean that we can make even bigger ones by tapping into the decades of experience from the real-world theatre and live event industry. And after months of work, we've crafted a full pipeline to bring real show technology into Minecraft.

Managing expectations

Before we dive into the technical details, let's set some expectations. I won't be able to share any of our specific implementations, code, or secret sauce. I will, however, share the general concepts and ideas behind our work.

What are DMX and Art-Net?

A DMX splitter from my local student pub, taking one 3-pin input and splitting it into 6 lines for different facades

DMX is a protocol used in the lighting industry to control fixtures. It's a simple serial protocol that sends a stream of bytes over RS-485. It's been around for a long time, and it's still widely used today. You can imagine each "command" as an array, a huge list of numbers that represent the intensity of a light, the color of a light, or any other parameter you can think of. DMX512 has 512 usable addresses per frame, with the remaining bytes being used for synchronization and framing.

Each fixture has an address, which is the Nth slot in this list, from where it will read a few numbers (depending on its parameters) and parse them as its state.

For example, let's say that we have a simple RGB light that has 3 channels (1: red, 2: green, 3: blue). If we send the command [255, 0, 0] to the light, it will turn on at full brightness and be red. If we add a second light, it would start listening at the 4th slot, at which point our universe would look like this: [255, 0, 0, 255, 0, 0].
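To make the addressing concrete, here is a minimal Python sketch of that two-light example. The `patch_rgb` helper is a made-up name for illustration, not part of any real lighting library.

```python
DMX_SLOTS = 512  # usable slots in one DMX512 universe


def patch_rgb(universe: bytearray, address: int, red: int, green: int, blue: int) -> None:
    """Write an RGB fixture's three channels starting at a 1-based DMX address."""
    if not 1 <= address <= DMX_SLOTS - 2:
        raise ValueError(f"address {address} leaves no room for 3 channels")
    start = address - 1  # DMX addresses are 1-based, Python indices are 0-based
    universe[start:start + 3] = bytes((red, green, blue))


universe = bytearray(DMX_SLOTS)    # every slot starts at 0 (off)
patch_rgb(universe, 1, 255, 0, 0)  # first light listens on slots 1-3
patch_rgb(universe, 4, 255, 0, 0)  # second light listens on slots 4-6
print(list(universe[:6]))          # [255, 0, 0, 255, 0, 0]
```

The fixtures never "receive" their own message. Every fixture sees the whole list and simply reads the slice that starts at its address.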

You can imagine that this becomes a huge list pretty fast, mostly because most lights need far more than 3 parameters (moving lights can have 20+ channels for all their gobos, movements, colors, etc.). This is why DMX is usually split into universes, which are separate lists of 512 slots. This way, you can have a lot of lights, but you can also have a lot of parameters for each light. If one universe gets full, you can just overflow into the next one. It's not uncommon for this to get out of hand. Eurovision 2024 used a whopping 663 DMX universes.
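The overflow arithmetic is simple enough to sketch. The function names below are hypothetical, and the second one assumes a fixture is never split across two universes, which is the usual convention when patching.

```python
DMX_SLOTS = 512  # slots per universe


def locate(absolute_channel: int) -> tuple[int, int]:
    """Map a 0-based channel index across the whole rig to (universe, 1-based slot)."""
    if absolute_channel < 0:
        raise ValueError("channel index must not be negative")
    universe, offset = divmod(absolute_channel, DMX_SLOTS)
    return universe, offset + 1


def universes_needed(fixture_count: int, channels_per_fixture: int) -> int:
    """Universes required when a fixture may not straddle a universe boundary."""
    fixtures_per_universe = DMX_SLOTS // channels_per_fixture
    return -(-fixture_count // fixtures_per_universe)  # ceiling division


print(locate(511))                # (0, 512): last slot of the first universe
print(locate(512))                # (1, 1): first slot of the next universe
print(universes_needed(100, 20))  # 4: only 25 twenty-channel fixtures fit per universe
```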

DMX is normally transferred over a 3-pin (or 5-pin) XLR cable, but it can also be sent over Ethernet, which is where Art-Net comes in. Art-Net is a protocol that sends DMX over IP (using UDP), which is a lot more flexible than physical cables. It's also a lot easier to work with, as you can just use a normal network cable to send DMX around. And that's also where we start to differ from the real world.

Because it's a network signal, we can set up a socket to listen for Art-Net commands, and do with them whatever we want. The lighting desk/software doesn't know if it's talking to a real light or a Minecraft block, it just sends the commands and hopes that something will listen.

Art-Net is an open standard provided by Artistic Licence, so you can just implement it in your own software. And that's what we did.
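As a rough idea of what "implementing it yourself" involves, here is a minimal Python sketch that builds and parses an ArtDmx packet (the Art-Net packet type that carries one universe of DMX data). This is not our implementation: the function names are made up for illustration, and it ignores everything else in the spec, such as ArtPoll discovery and sequence handling.

```python
import socket
import struct

ARTNET_ID = b"Art-Net\x00"  # 8-byte signature at the start of every packet
OP_DMX = 0x5000             # opcode of an ArtDmx packet
ARTNET_PORT = 6454          # standard Art-Net UDP port
HEADER_SIZE = 18            # bytes before the DMX data starts


def build_artdmx(universe: int, data: bytes, sequence: int = 0) -> bytes:
    """Build an ArtDmx packet, handy for testing without a lighting desk."""
    return (
        ARTNET_ID
        + struct.pack("<H", OP_DMX)             # opcode, little-endian
        + struct.pack(">H", 14)                 # protocol version, big-endian
        + bytes((sequence, 0))                  # sequence number, physical port
        + struct.pack("<H", universe & 0x7FFF)  # SubUni byte, then Net byte
        + struct.pack(">H", len(data))          # data length, big-endian
        + data
    )


def parse_artdmx(packet: bytes):
    """Return (universe, dmx_data) for an ArtDmx packet, or None for anything else."""
    if len(packet) < HEADER_SIZE or packet[:8] != ARTNET_ID:
        return None
    (opcode,) = struct.unpack_from("<H", packet, 8)
    if opcode != OP_DMX:
        return None
    sub_uni, net = packet[14], packet[15]
    (length,) = struct.unpack_from(">H", packet, 16)
    return (net << 8) | sub_uni, packet[HEADER_SIZE:HEADER_SIZE + length]


def listen(handler, host: str = "0.0.0.0") -> None:
    """Blocking receive loop: call handler(universe, data) for every ArtDmx packet."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, ARTNET_PORT))
        while True:
            packet, _sender = sock.recvfrom(1024)
            parsed = parse_artdmx(packet)
            if parsed is not None:
                handler(*parsed)


# Round trip without touching the network:
packet = build_artdmx(5, bytes((255, 0, 0, 255, 0, 0)))
print(parse_artdmx(packet))
```

Calling `listen(print)` on a machine that a lighting desk is sending to would dump every incoming universe to the console, which is a decent first smoke test.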

The pipeline

We have two separate pipelines for working with Art-Net: the primary one is for the creative folks, and the secondary one is for playback.

- Primary pipeline (live)

A Cobalt debug view that shows when someone is connected to the Art-Net node

When building a show, you want to see what you are programming in your lighting software, preferably in real-time. This is where the primary pipeline comes in. It runs an embedded Art-Net node in-game, which we can target through unicast; the incoming data then gets injected into our rendering engine. This way, we can see the lights in-game, and we can see the lights in our lighting software. This is a huge improvement over the old way of doing things, where we had to manually convert the show into Minecraft commands or scriptable actions.

- Secondary pipeline (playback)

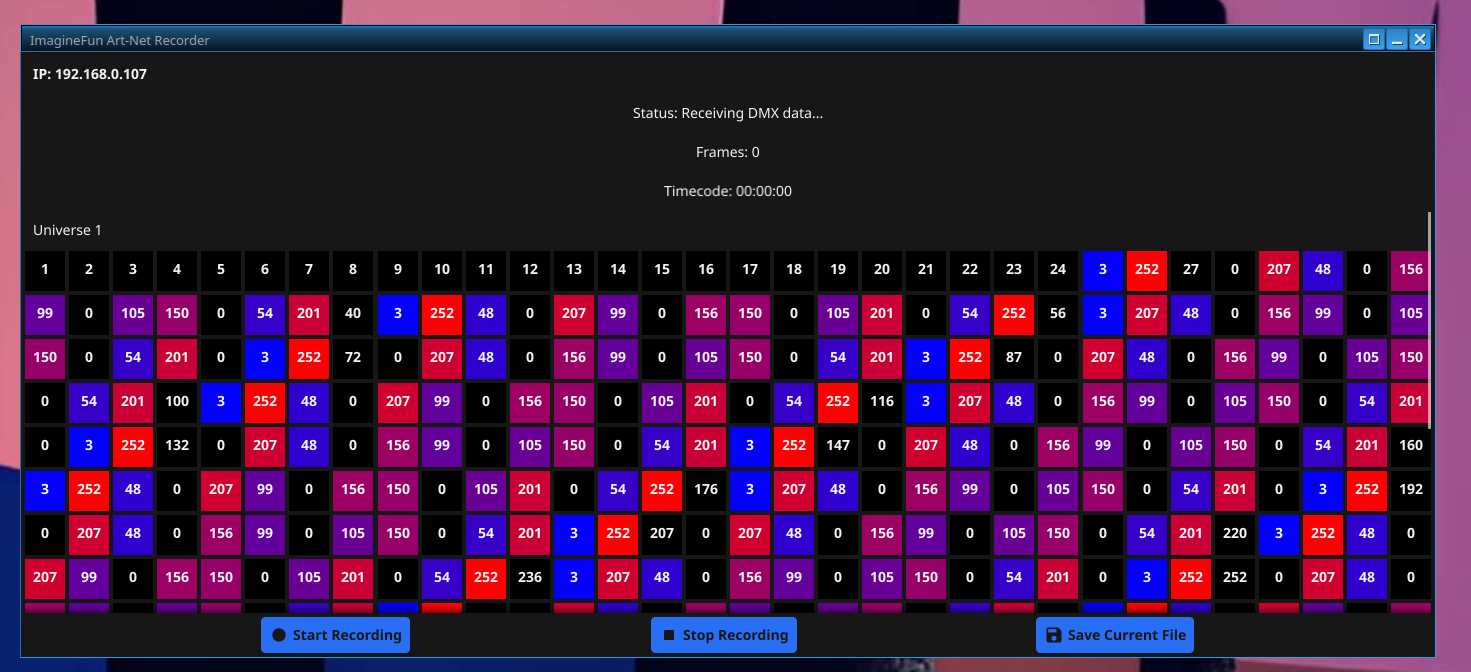

The self-made Art-Net recorder with timecode support

And while this works for building shows, it doesn't work for running them. We don't want to have someone operating every show, because they run on a set schedule and we want to be able to run them without us having to get up at 3 AM. This is where the secondary pipeline comes in. We have a custom standalone application (written in Go) that also listens for Art-Net commands, but instead of rendering them in-game, it processes them, minifies every frame (only storing full keyframes every few seconds), and then pumps them into a file (a shared Protocol Buffers format) that we can upload to our server.

Then, when it's time for our night spectacles, the show can be loaded into our server, and the server will play the show back, parsing the Protocol Buffers file and sending the raw timecoded DMX changes to our rendering engine, the same way our primary pipeline does.
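I can't share the real recorder or its file format, but the general idea of "keyframes plus changes" can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the record layout, the `encode`/`decode` names, the keyframe interval counted in frames instead of seconds, and plain tuples standing in for Protocol Buffers messages.

```python
from typing import Iterable, Iterator

KEYFRAME_INTERVAL = 4  # hypothetical: store a full frame every 4th frame


def encode(frames: Iterable[tuple[int, bytes]], keyframe_interval: int = KEYFRAME_INTERVAL) -> list:
    """Turn (timecode_ms, universe_bytes) frames into keyframe and delta records."""
    records = []
    previous = None
    for index, (timecode, frame) in enumerate(frames):
        if previous is None or index % keyframe_interval == 0:
            records.append(("key", timecode, bytes(frame)))  # full snapshot
        else:
            # Only remember the slots whose value changed since the last frame.
            changes = {
                slot: new
                for slot, (old, new) in enumerate(zip(previous, frame))
                if old != new
            }
            if changes:  # frames without any change are dropped entirely
                records.append(("delta", timecode, changes))
        previous = frame
    return records


def decode(records: list) -> Iterator[tuple[int, bytes]]:
    """Replay records back into full (timecode_ms, universe_bytes) frames."""
    state = bytearray()
    for kind, timecode, payload in records:
        if kind == "key":
            state = bytearray(payload)
        else:
            for slot, value in payload.items():
                state[slot] = value
        yield timecode, bytes(state)


frames = [
    (0, bytes((0, 0, 0, 0))),
    (40, bytes((255, 0, 0, 0))),
    (80, bytes((255, 0, 0, 0))),     # nothing changed, so nothing is stored
    (120, bytes((255, 128, 0, 0))),
    (160, bytes((0, 0, 0, 0))),      # 4th frame after the start: keyframe again
]
records = encode(frames)
print([kind for kind, _, _ in records])  # ['key', 'delta', 'delta', 'key']
```

The periodic keyframes are what make seeking cheap: playback can jump to the nearest keyframe and apply a handful of deltas instead of replaying the show from the first frame.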

Mapping to in-game actions

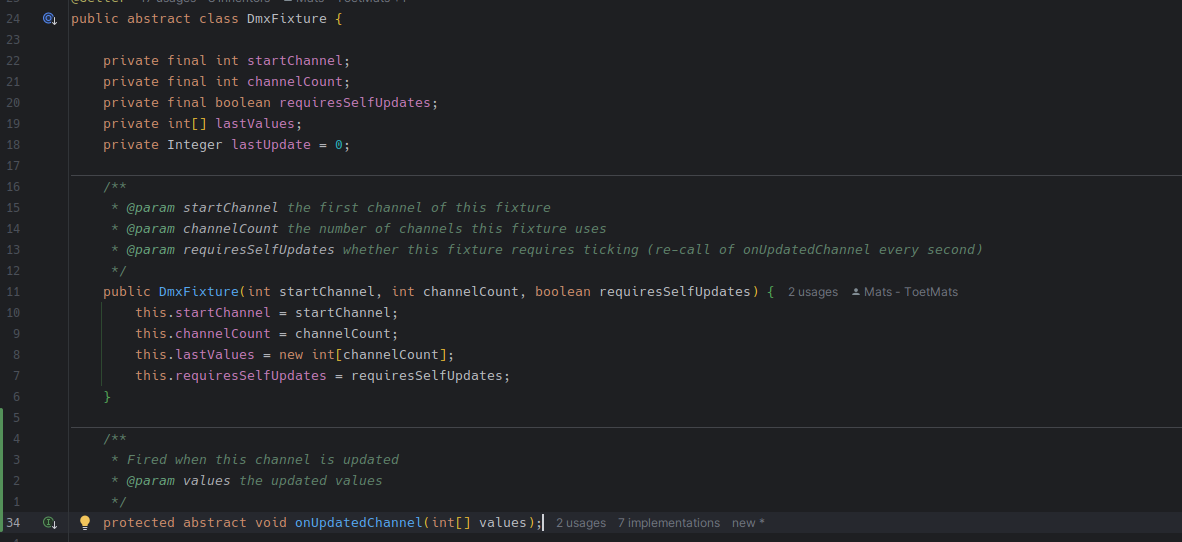

Snippet from Cobalt's abstract fixture class

We have a predefined set of virtual fixtures (like lasers, moving spots, command triggers, and even pixel-mapped LED blocks), which are all 'patched' to listen to a specific universe and address. That way, our rendering engine can take the raw DMX universe states and pass them on to our fixtures. And this is where the magic happens. We can now control our in-game lights with a real lighting desk, and we can control our real lights with in-game actions.

| Universe | Type | Start Channel | End Channel | Amount | Description |
|---|---|---|---|---|---|
| 5 | End Beam | 0 | 127 | 32 | Castle End Beams |
| 5 | Moving Head | 128 | 131 | 1 | Castle Moving Head |
| 5 | Pixel Block | 132 | 320 | 63 | Castle Matrix |
| 5 | Moving Head | 321 | 328 | 2 | Ballroom Lasers |
| 6 | Pixel Block | 0 | 62 | 20 | Decorative Pixel (Christmas Tree) |
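To show how a patch like this can drive fixtures, here is a minimal Python sketch. It is not Cobalt's fixture class: the `Fixture`, `PixelBlock`, and `patch_range` names are invented for this example, and it assumes 0-based channels (as in the table) and 3 channels (RGB) per pixel block.

```python
from abc import ABC, abstractmethod


class Fixture(ABC):
    """A virtual fixture patched to one universe and a start channel."""

    channel_count = 1  # how many consecutive channels this fixture reads

    def __init__(self, universe: int, start_channel: int) -> None:
        self.universe = universe
        self.start_channel = start_channel  # 0-based, like the patch table

    @property
    def end_channel(self) -> int:
        return self.start_channel + self.channel_count - 1

    def update(self, universes: dict[int, bytes]) -> None:
        """Pick this fixture's slice out of the raw universe state and apply it."""
        data = universes.get(self.universe)
        if data is None or len(data) <= self.end_channel:
            return  # universe missing or frame too short: keep the old state
        self.apply(data[self.start_channel:self.end_channel + 1])

    @abstractmethod
    def apply(self, channels: bytes) -> None:
        """Translate raw channel values into in-game state."""


class PixelBlock(Fixture):
    channel_count = 3  # assumed layout: red, green, blue

    def __init__(self, universe: int, start_channel: int) -> None:
        super().__init__(universe, start_channel)
        self.color = (0, 0, 0)

    def apply(self, channels: bytes) -> None:
        self.color = tuple(channels)


def patch_range(fixture_type, universe: int, start_channel: int, amount: int) -> list:
    """Patch `amount` fixtures back to back, starting at `start_channel`."""
    step = fixture_type.channel_count
    return [fixture_type(universe, start_channel + i * step) for i in range(amount)]


# The "Castle Matrix" row: 63 pixel blocks in universe 5, starting at channel 132.
castle_matrix = patch_range(PixelBlock, universe=5, start_channel=132, amount=63)

frame = bytearray(512)
frame[132:135] = bytes((255, 0, 0))  # make the first pixel red
for pixel in castle_matrix:
    pixel.update({5: bytes(frame)})

print(castle_matrix[0].color, castle_matrix[-1].end_channel)  # (255, 0, 0) 320
```

The last pixel ends on channel 320, which lines up with the end channel in the Castle Matrix row.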

Main castle end beams

Main castle pixel mapping screen

Conclusion

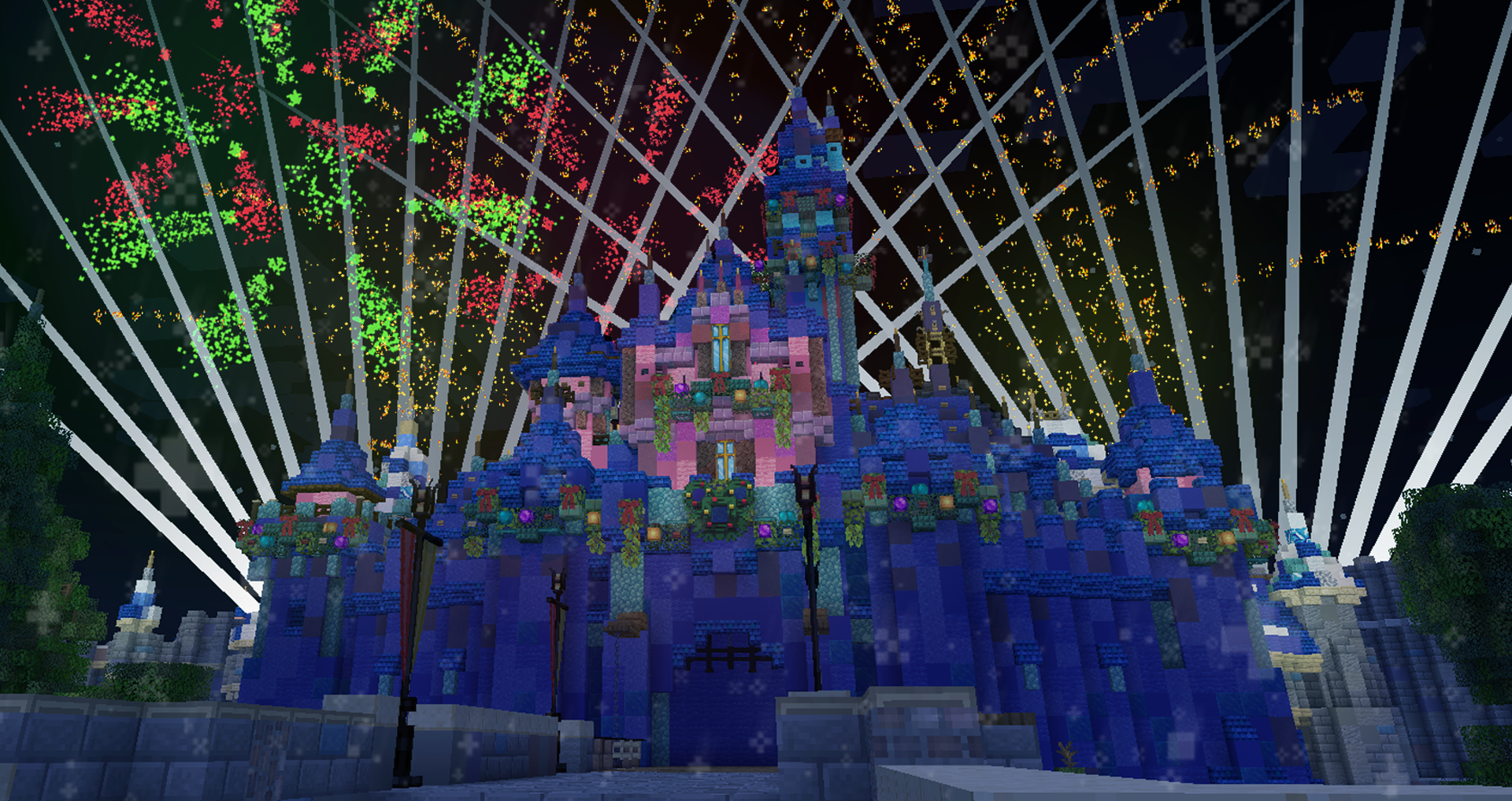

This has been a huge project for us, and it's been a lot of fun to work on. A few of us work in the real-life event industry as well, and are incredibly passionate about bringing the work we love so much into the game we love even more. We've been able to make some really cool shows with this technology, and we're still working on improving it every day and seeing how far we can push it to build shows on scales we've never seen before.

End product - show by SlinxMC
